Author: PorYoung. Original document: https://static.poryoung.cn/aiida/. Published: October 2020.

Basic Tutorial and Simple Examples for AiiDA

Official Websites

AiiDA installation

MiniConda Installation

Download the Miniconda installation package: https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/

Install conda

# `xxx` is the version code

bash ./Miniconda-[xxx].sh

Restart the shell

Conda usage

Configure the Tsinghua (or another) mirror

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --set show_channel_urls yes

Create a virtual environment

conda create -n [env_name] python=[python version]

Activate the virtual environment

conda activate [env_name]

Deactivate the virtual environment

conda deactivate

Install AiiDA

Use conda to install AiiDA (optional; see the AiiDA documentation)

conda create -n aiida -c conda-forge python=3.7 aiida-core aiida-core.services pip

conda activate aiida

conda deactivate

Use conda to create a Python virtual environment, and use pip to install AiiDA (optional)

# for example

conda create -n aiida python=3.8

conda activate aiida

pip install aiida-core

Prerequisites Installation

# you may want to run `apt update` first

# if the update reports errors, try switching to another package mirror

sudo apt-get install postgresql postgresql-server-dev-all postgresql-client

sudo apt-get install rabbitmq-server

sudo rabbitmqctl status

Setting up the installation

# For maximum customizability, one can use `verdi setup`

verdi quicksetup

On success, the output looks like this:

Success: created new profile `a`.

Info: migrating the database.

Operations to perform:

Apply all migrations: auth, contenttypes, db

Running migrations:

Applying contenttypes.0001_initial... OK

Applying contenttypes.0002_remove_content_type_name... OK

...

Applying db.0044_dbgroup_type_string... OK

Success: database migration completed.

Steps from the Getting Started page, section "Start Computation Services"

rabbitmq-server -detached

DIAGNOSTICS

===========

nodes in question: [rabbit@ubuntu01]

hosts, their running nodes and ports:

- ubuntu01: [{rabbit,39056},{rabbitmqprelaunch2034,39422}]

current node details:

- node name: rabbitmqprelaunch2034@ubuntu01

- home dir: /var/lib/rabbitmq

- cookie hash: gHkRo5BrsxP/v89EnRf5/w==

verdi daemon start 2

Starting the daemon... RUNNING

Check the setup (the output below is what `verdi status` reports)

✔ config dir: /home/majinlong/.aiida

✔ profile: On profile a

✔ repository: /home/majinlong/.aiida/repository/a

✔ postgres: Connected as aiida_qs_majinlong_7b54632a71306c771d8043bd779c519c@localhost:5432

✔ rabbitmq: Connected to amqp://127.0.0.1?heartbeat=600

✔ daemon: Daemon is running as PID 2202 since 2020-09-22 11:42:05

Install graphviz

# for Ubuntu

sudo apt install graphviz

Have a quick look at Basic Tutorial

setup computer

verdi computer setup

# or set up from a .yml file

verdi computer setup --config computer.yml

computer.yml: [contents missing from the source]
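As a rough guide to what computer.yml holds: the file carries the same answers that `verdi computer setup` asks for interactively. The sketch below builds such a file as text from Python. The key names are assumed to match the option names documented for AiiDA 1.x, and every value (label, work directory, MPI settings) is a placeholder assumption, not the author's configuration.

```python
# Hypothetical values: adjust every entry to your own machine.
computer_config = {
    "label": "TheHive",
    "hostname": "localhost",
    "description": "local workstation",
    "transport": "local",
    "scheduler": "direct",
    "work_dir": "/tmp/aiida_work",
    "mpirun_command": "mpirun -np {tot_num_mpiprocs}",
    "mpiprocs_per_machine": "2",
}


def to_yaml(config):
    """Render a flat dict of strings as simple `key: "value"` YAML lines."""
    lines = ["---"]
    for key, value in config.items():
        lines.append(f'{key}: "{value}"')
    return "\n".join(lines) + "\n"


yaml_text = to_yaml(computer_config)
print(yaml_text)
```

Saving the printed text as computer.yml gives a file that can be passed to `verdi computer setup --config computer.yml`; any key left out is asked for interactively.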

setup code

verdi code setup

# or set up from a .yml file

verdi code setup --config code.yml

code.yml: [contents missing from the source]

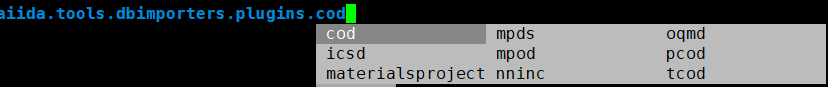

how to check plugin

verdi plugin list

# or

verdi plugin list [spec]

how to list processes

verdi process list -a

Quantum ESPRESSO Installation

Setup of Intel Compiler

Download Intel® Parallel Studio XE and follow the guide to install it.

Set the environment variables in ~/.bashrc (the default install path is /opt/intel)

# intel and mkl

source /opt/intel/bin/compilervars.sh intel64

source /opt/intel/mkl/bin/mklvars.sh intel64

export MKL_LIB_PATH=/opt/intel/mkl/lib/intel64

export PATH=/opt/intel/bin:$PATH

# mpi and others

source /opt/intel/impi/2018.4.274/bin64/mpivars.sh intel64

export MPI_HOME=/opt/intel/compilers_and_libraries_2018.5.274/linux/mpi

export PATH=$MPI_HOME/bin:$PATH

export LD_LIBRARY_PATH=$MPI_HOME/lib:$LD_LIBRARY_PATH

Source ~/.bashrc or log in again for the changes to take effect.

Setup of QE

Log in as the root user. :question: Other users are supposed to be able to install QE, but somehow the MKL library cannot be found when running sudo ./configure (possibly because sudo does not pass on the environment variables set in ~/.bashrc).

Download the QE release version.

Use ./configure to check the environment, then configure the installation:

./configure --prefix= CC=mpiicc CXX=icpc F77=ifort F90=ifort FC=ifort MPIF90=mpiifort

If the result matches your expectations, run make and then make install.

aiida-quantumespresso plugin

IMPORTANT

Please check that the aiida-core version is 1.4.0 or higher.

install aiida-quantumespresso plugin from github

git clone https://github.com/aiidateam/aiida-quantumespresso

setup of a computer and other settings

Set up a computer named TheHive; all later calculations will run on it.

# -L [computer name] -w [work directory]

verdi computer setup -L TheHive -H localhost -T local -S direct -w `echo $PWD/work` -n

verdi computer configure local TheHive --safe-interval 5 -n

How to check codes, computers and plugins in AiiDA

# list AiiDA codes, computers and plugins

# e.g. `verdi plugin list aiida.calculations`

verdi code list

verdi computer list

verdi plugin list

Pseudopotential families

Before you run a calculation, you need to upload or define the pseudopotentials.

# upload

verdi data upf uploadfamily [path/to/folder] [name_of_the_family] "some description for your convenience"

# list the available families

verdi data upf listfamilies

See the official docs for what pseudopotential families are and how to create them.

Run pw example

The plugin requires at least the presence of:

- An input structure;

Set up the code quantumespresso.pw

pw_code.yml:

```yml
label: "pwt"
description: "quantum_espresso pw test"
input_plugin: "quantumespresso.pw"
on_computer: true
remote_abs_path: "/home/majinlong/qe_release_6.4/bin/pw.x"
computer: "TheHive"
```

Copy the example code provided here [^1], or download the official example file to test (with caveats, see [^2]).

run with verdi

verdi run example

run with python

python example.py

run in verdi shell

verdi shell

> import example

Check the documentation to understand how input files map to Python dicts and how to define your inputs.
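To make the mapping concrete: a pw.x input file is organised in Fortran namelists (&CONTROL, &SYSTEM, &ELECTRONS), and the plugin takes the same information as a nested Python dict keyed by namelist name. The sketch below renders such a dict back into namelist text. It only illustrates the correspondence and is not the plugin's own input writer; the helper names and parameter values are assumptions chosen for the example.

```python
def format_value(value):
    """Format a Python value the way a Fortran namelist expects it."""
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return ".true." if value else ".false."
    if isinstance(value, str):
        return f"'{value}'"
    return str(value)


def to_namelists(parameters):
    """Render {'NAMELIST': {'key': value}} as Fortran namelist text."""
    lines = []
    for namelist, entries in parameters.items():
        lines.append(f"&{namelist}")
        for key, value in entries.items():
            lines.append(f"  {key} = {format_value(value)}")
        lines.append("/")
    return "\n".join(lines)


# Assumed example values, in the nested-dict form passed to the plugin.
parameters = {
    "CONTROL": {"calculation": "scf", "tprnfor": True},
    "SYSTEM": {"ecutwfc": 30.0, "ecutrho": 240.0},
    "ELECTRONS": {"conv_thr": 1e-06},
}

print(to_namelists(parameters))
```

Reading the printed text next to the dict shows the rule of thumb: each top-level key becomes a namelist, and each inner key becomes one `name = value` line.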

Retrieve results

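If the calculation was launched with run, get the CalcJobNode PK:

verdi process list -a

Then, in verdi shell:

from aiida.orm import load_node

calc = load_node(PK)

The results are in calc.res

If the calculation was launched with submit:

calc = submit(CalcJob)

# calc.res is populated once the calculation has finished

calc.res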

Run ph example

Set up the code quantumespresso.ph in the same way as for pw

ph_code.yml: [contents missing from the source]

Use the example code provided here [^3] rather than the official example (unless an updated version is available).

run code

verdi run ph_example.py

Retrieve the results in the same way as above.

Run nscf example

- run the example code here [^4]

- retrieve the results in the same way as above

Run bands example

Almost the same as the nscf example code; just change 'nscf' to 'bands' in updated_parameters['CONTROL']['calculation'] = 'nscf' [^5].
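The change amounts to one key in the nested parameters dict. A minimal sketch, assuming the nscf parameters look like the hypothetical dict below (only updated_parameters and the CONTROL/calculation key come from the text above):

```python
import copy

# Hypothetical nscf parameters; only CONTROL/calculation matters here.
nscf_parameters = {
    "CONTROL": {"calculation": "nscf", "restart_mode": "from_scratch"},
    "SYSTEM": {"ecutwfc": 30.0, "ecutrho": 240.0},
}

# Deep-copy so the nscf dict is left untouched, then switch the run type.
updated_parameters = copy.deepcopy(nscf_parameters)
updated_parameters["CONTROL"]["calculation"] = "bands"

print(updated_parameters["CONTROL"]["calculation"])  # bands
print(nscf_parameters["CONTROL"]["calculation"])     # nscf
```

A bands run is normally given k-points along a high-symmetry path rather than a uniform mesh, so the k-points input usually changes as well.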

# generate the output_bands graph [command missing from the source]

Run q2r example

Set up the q2r.x code; here is the q2r.yml: [contents missing from the source]

Run the example code here [^6] (as with the _ph_ calculation, you need to load the _ph_ calculation node).

Note the output:

SinglefileData: a file containing the force constants in real space (in the work directory, named real_space_force_constants.dat)

About Seekpath module

Here is an example of how to set k-points with seekpath.

Note that the returned result is a KpointsData object, which can be used directly as the kpoints input.

[example code missing from the source]

Two-dimensional structure data

When the structure data is two-dimensional, you may set the k-mesh and k-points manually.
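The idea can be sketched in plain Python. For a slab or monolayer whose non-periodic direction is the third lattice vector (an assumption), sample only in-plane and keep a single k-point along z. The function name and mesh sizes below are hypothetical, and the grid is an unshifted, Gamma-centred one in fractional coordinates. In AiiDA, the mesh triple would typically be handed to KpointsData.set_kpoints_mesh, and an explicit list to KpointsData.set_kpoints.

```python
from itertools import product


def in_plane_kpoints(nx, ny):
    """Return the mesh triple and a uniform in-plane grid with kz fixed at 0.

    Coordinates are fractional (units of the reciprocal lattice vectors).
    """
    mesh = [nx, ny, 1]  # one k-point along the non-periodic direction
    points = [
        (i / nx, j / ny, 0.0)
        for i, j in product(range(nx), range(ny))
    ]
    return mesh, points


mesh, points = in_plane_kpoints(4, 4)
print(mesh)         # [4, 4, 1]
print(len(points))  # 16
print(points[:3])
```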

About import and export data

Local data files

show or export StructureData

verdi data structure show --format ase <IDENTIFIER>

verdi data structure export --format xsf <IDENTIFIER> > BaTiO3.xsf

# xcrysden --xsf BaTiO3.xsf

import xyz data

verdi data structure import aiida-xyz test.xyz

import cif data and convert it to a structure

verdi data structure import ase 9008565.cif

# or import as CifData

verdi data cif import test.cif

# or import directly from a file in verdi shell

> CifData = DataFactory('cif')

> cif = CifData(file='/path/to/file.cif')

> cif.store()

Use the get_structure method to obtain a structure that AiiDA supports.

cif = load_node(cif_data_pk)

structure = cif.get_structure()

Cloud Databases

- supported data

- query structure data from cloud databases

Import tools

from aiida.plugins import DbImporterFactory

Materials Project

MaterialsProjectImporter = DbImporterFactory('materialsproject')

m = MaterialsProjectImporter(api_key='Your_API_KEY')

query_dict = {"elements": {"$in": ["Li", "Na", "K"], "$all": ["O"]}, "nelements": 2}

res = m.query(query=query_dict, properties='structure')

COD

External (online) repository COD

CODImporter = DbImporterFactory('cod')

importer = CODImporter()

args = {

    'element': 'C',

    'title': '',

    'number_of_elements': 3,

}

query_results = importer.query(**args)

Structure data group

create a group

verdi group create [name]

add nodes to a group

verdi group add-nodes -G [name] [pk]

# add with python

group = load_group(label='two-dimension')

group.add_nodes(load_node(PK))

query structures in a group

from aiida.orm import Group, QueryBuilder, StructureData

qb = QueryBuilder()

qb.append(Group, filters={'label': 'test'}, tag='group')

qb.append(StructureData, with_group='group')

# Print the PK of every structure in the group.

for structure in qb.all(flat=True):

    print(structure.pk)

Export profile data

[export commands missing from the source]

Write your own workflow

Refer to the example provided here for pw and bands calculations.

Some concepts and commands about aiida

Calculation functions require all returned output nodes to be unstored.

Work functions have exactly the opposite requirement: all the outputs they return must already be stored, because a work function cannot create new data.

Go to the work directory

verdi calcjob gotocomputer [pk]

Issues :question:

import data

verdi data structure import aiida-xyz 4afd2627-5695-49ee-8e2f-2d1f49bff3bb_structure.xyz

> Critical: Number of atom entries (7) is smaller than the number of atoms (8)
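The message suggests that the atom count on the first line of the file does not match the number of atom lines that follow. The xyz format is: atom count on line 1, a comment on line 2, then one `symbol x y z` line per atom. A small check along these lines can locate such a mismatch before importing. The helper name and the sample data are made up for illustration, and whether this was the actual cause in the case above is an assumption.

```python
def check_xyz(text):
    """Return (declared, found) atom counts for the contents of an xyz file."""
    lines = text.splitlines()
    declared = int(lines[0].split()[0])
    # Line 2 is a free-form comment; atom entries start on line 3.
    found = 0
    for line in lines[2:]:
        fields = line.split()
        if len(fields) >= 4:  # symbol plus three coordinates
            found += 1
    return declared, found


# Made-up sample: the header declares 3 atoms but only 2 entries follow.
sample = """3
water, with one hydrogen missing
O 0.000 0.000 0.000
H 0.757 0.586 0.000
"""

declared, found = check_xyz(sample)
if found < declared:
    print(f"header declares {declared} atoms, but only {found} entries found")
```

If the counts differ, either the header or the atom list needs correcting before the file is imported.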

Appendix

[^1]: pw example code [code missing from the source]

[^2]: The current official example code (v3.1.0) is not compatible with the latest AiiDA version, nor does it work with its command-line test tool; change it as follows. [code missing from the source]

[^3]: ph example code [code missing from the source]

[^4]: nscf code [code missing from the source]

[^5]: bands code

[^6]: q2r code [code missing from the source]